Temporal Difference

- Temporal Difference

- Intro model-free

- Prediction vs Control

- Monte-Carlo Learning

- Temporal-Difference (TD) Learning

- SARSA

- Q-learning

- Eligibility Traces

Intro model-free

- It learns only values like

- instead of

- (which would be model-based)

Prediction vs Control

- The Behaviour policy (used to act) can be either the same (on-policy) or

different (off-policy) with the

- estimation policy (the one that is being evaluated; prediction problem)

- target policy (greedy policy being evaluated and improved; control).

Methods:

- Monte-Carlo prediction/control methods

- Temporal Difference learning (prediction)

- On-policy SARSA, off-policy Q-Learning (control) algorithms

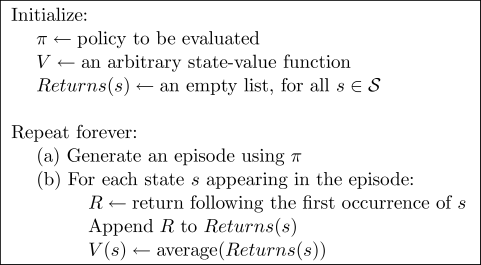

Monte-Carlo Learning

- For learning how good one policy is

- Will only update believe after whole episode

Temporal-Difference (TD) Learning

- vs MC: also update during episode

TD methods learn their estimates in part on the basis of other estimates. They learn a guess from a guess–they bootstrap.

SARSA

- State-Action-Reward-State’-Action’

- on-policy

This update is done after every transition from a nonterminal state . If is terminal, then is defined as zero. This rule uses every element of the quintuple of events, , that make up a transition from one state-action pair to the next. This quintuple gives rise to the name Sarsa for the algorithm.

Excourse: ε-Greedy Policy

with probability 1-ε:

with probability ε:

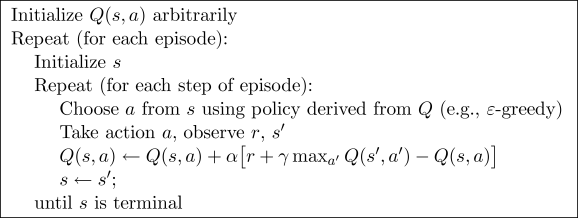

Q-learning

- off-policy

- it is no necessary to learn with the policy you will use

Q-larning vs SARSA

(On the cliff walking example)

After an initial transient, Q-learning learns values for the optimal policy, that which travels right along the edge of the cliff. Unfortunately, this results in its occasionally falling off the cliff because of the $\varepsilon $-greedy action selection. Sarsa, on the other hand, takes the action selection into account and learns the longer but safer path through the upper part of the grid. Although Q-learning actually learns the values of the optimal policy, its on-line performance is worse than that of Sarsa, which learns the roundabout policy. Of course, if $\varepsilon $ were gradually reduced, then both methods would asymptotically converge to the optimal policy.

Eligibility Traces

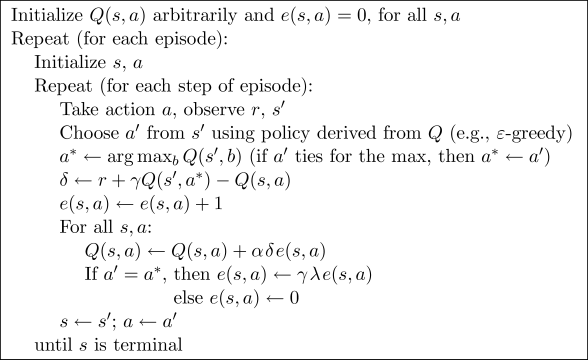

Sarsa(λ)

One-step Sarsa and Sarsa(λ) are on-policy algorithms, meaning that they approximate $q_\pi(s, a)$, the action values for the current policy, π, then improve the policy gradually based on the approximate values for the current policy. The policy improvement can be done in many different ways, as we have seen.

Q(λ)

Q($\lambda $) does not look ahead all the way to the end of the episode in its backup. It only looks ahead as far as the next exploratory action.